Hello!

I am a third-year PhD student in the Machine Learning Lab at the Computer Science department of Seoul National University, advised by Hyun Oh Song.

Jang-Hyun Kim, Jinuk Kim, Sangwoo Kwon, Jae W. Lee, Sangdoo Yun, Hyun Oh Song

NeurIPS 2025 Oral Presentation (77/21575 ≈ 0.36%)

Paper | Code | Project page | Bibtex

ICML 2025 ES-FoMo-III Workshop

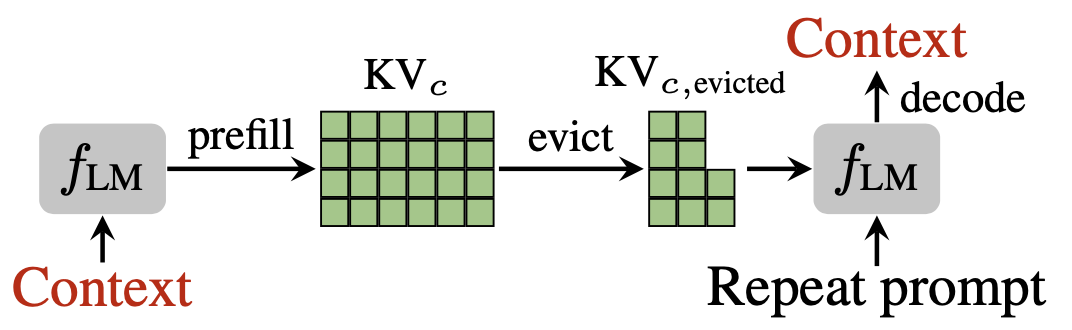

Jinuk Kim, Marwa El Halabi, Wonpyo Park, Clemens JS Schaefer, Deokjae Lee, Yeonhong Park, Jae W. Lee, Hyun Oh Song

ICML 2025

Paper | Code | Project page | Poster | Bibtex

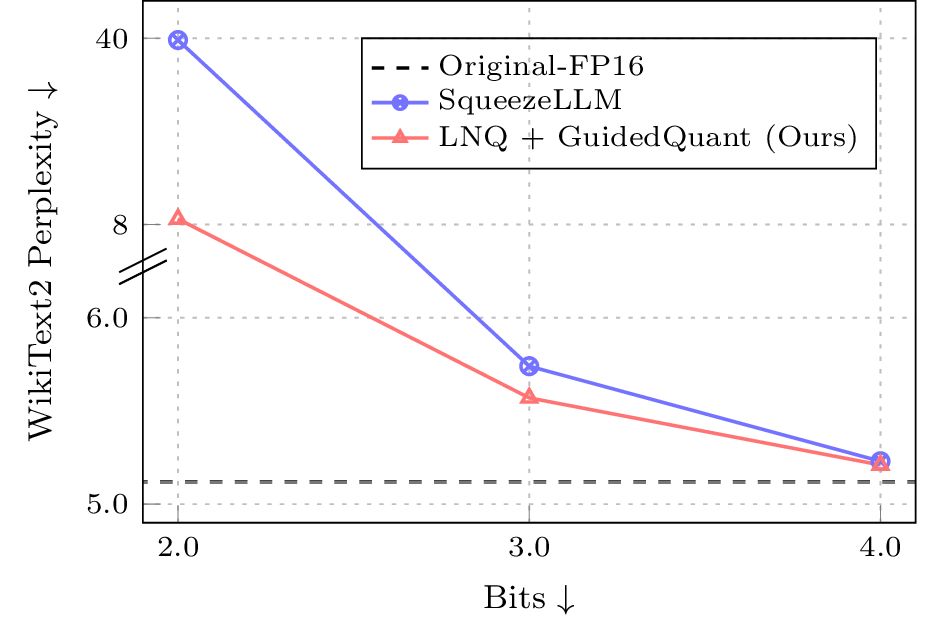

Jinuk Kim, Marwa El Halabi, Mingi Ji, Hyun Oh Song

ICML 2024

Paper | Code | Project page | Poster | Bibtex

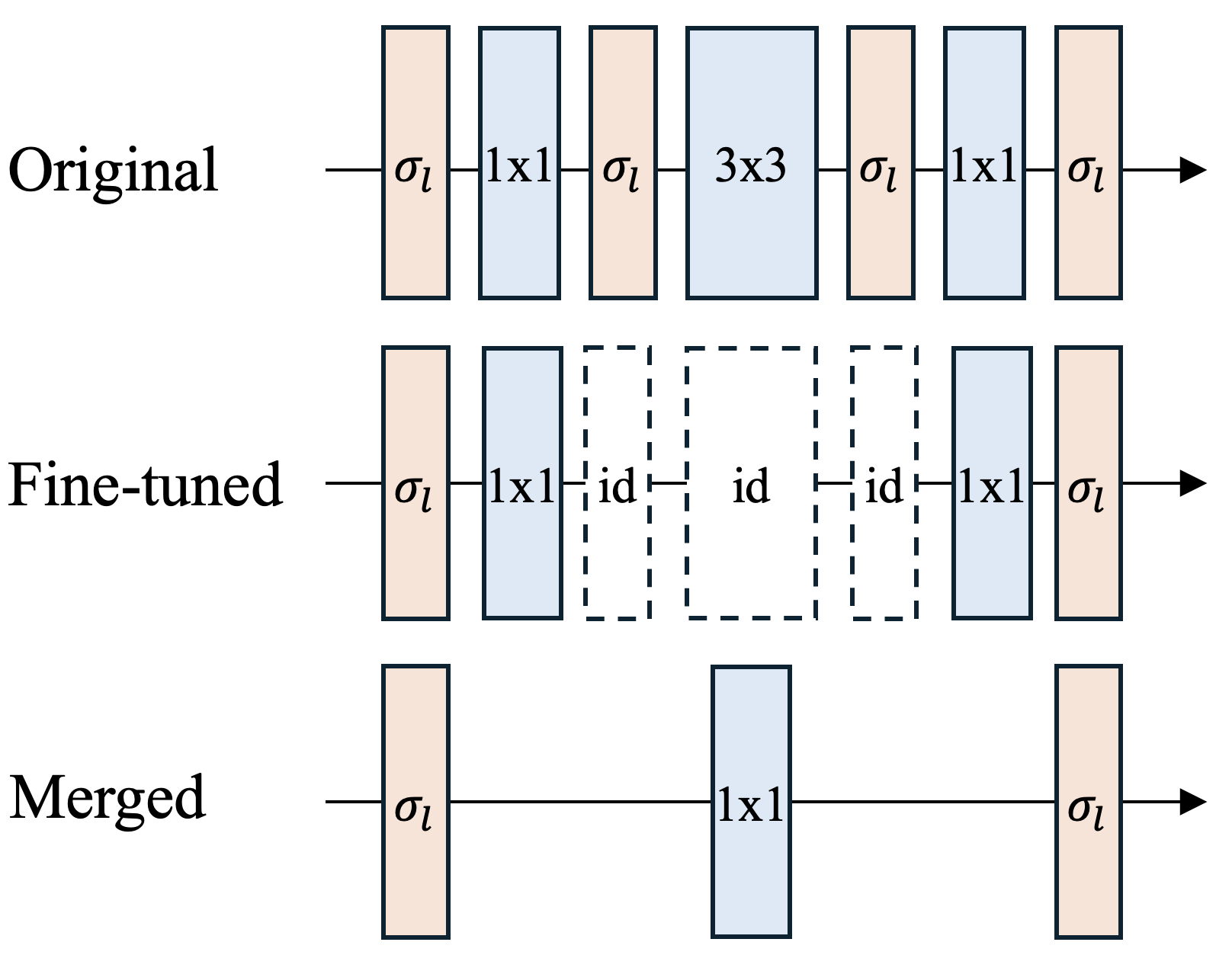

Jinuk Kim*, Yeonwoo Jeong*, Deokjae Lee, Hyun Oh Song

ICML 2023

Paper | Code | Project page | Bibtex

Jang-Hyun Kim, Jinuk Kim, Seong Joon Oh, Sangdoo Yun, Hwanjun Song, Joonhyun Jeong, Jung-Woo Ha, Hyun Oh Song

ICML 2022

Paper | Code | Bibtex

I own an SO100 robot, which I sometimes play with and teach to do things.

Talk-to-President is a service that lets you chat with AI personas of the presidential candidates, each speaking in the candidate's own voice.

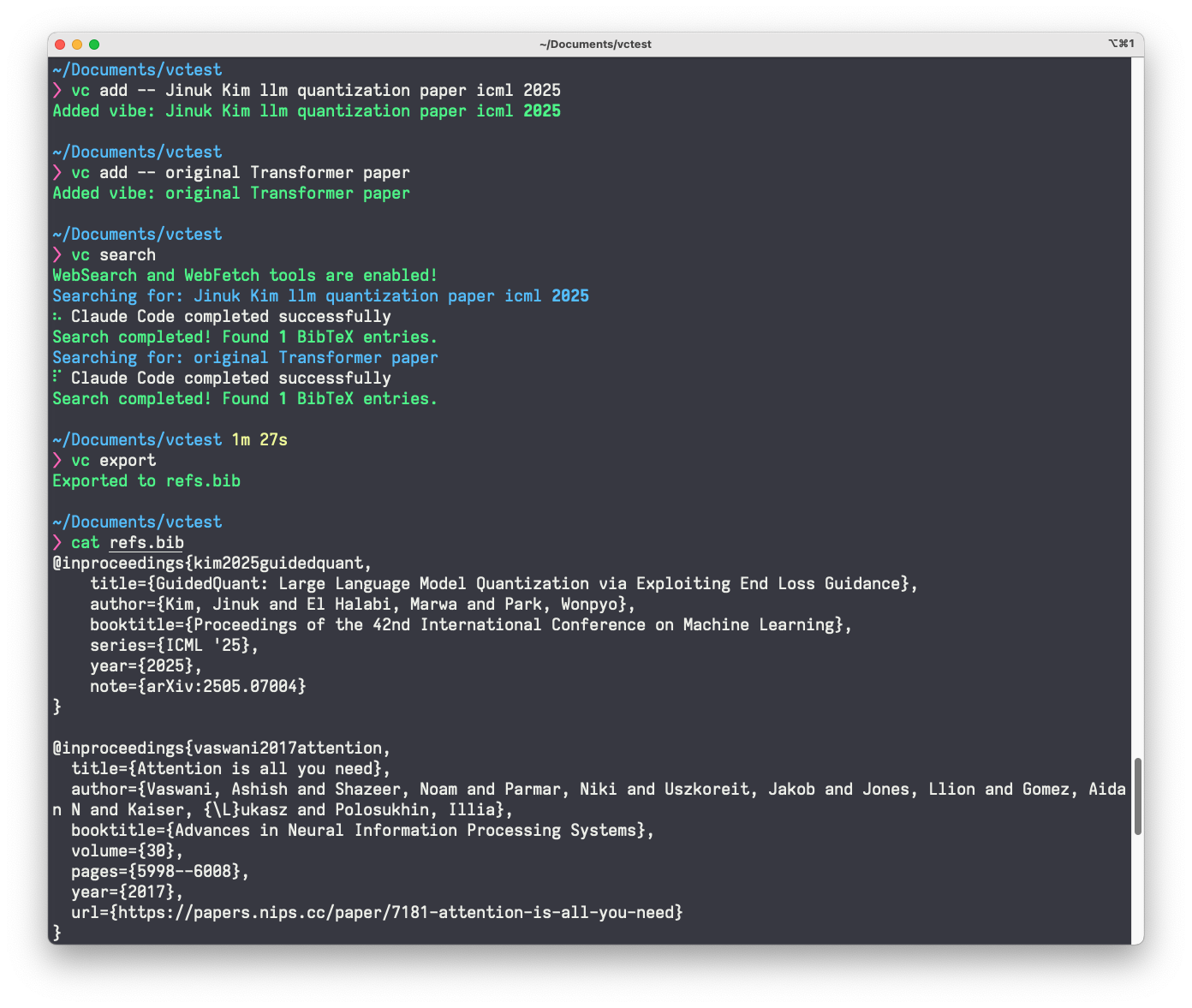

VibeCite uses your Claude Code to search for and generate BibTeX entries from your high-level paper descriptions.

SNU Board is an Android/iOS service that collects and aggregates notices from the websites of SNU departments (Android / Aug 2021 / 100+ MAU / 80+ WAU / 1000+ Downloads).

Last updated: September 19, 2025. Template based on Jon Barron's website.